Refactor API to remove in-place centroids, and update FFIMachine [do-NOT-merge]#54

Refactor API to remove in-place centroids, and update FFIMachine [do-NOT-merge]#54christinahedges wants to merge 10 commits intoSSDataLab:masterfrom

Conversation

|

There's a notebook here which shows how to use this PR for getting the TESS photometry out... |

jorgemarpa

left a comment

jorgemarpa

left a comment

There was a problem hiding this comment.

In the near future, we need to merge the changes from #60 including the perturbation API. I think after that the only 'big' changes to machine.py are the way to compute the source_mask and centroids.

| @property | ||

| def dx(self): | ||

| """Delta RA, corrected for centroid shift""" | ||

| if not sparse.issparse(self.dra): | ||

| return self.dra - self.ra_centroid.value | ||

| else: | ||

| ra_offset = sparse.csr_matrix( | ||

| ( | ||

| np.repeat( | ||

| self.ra_centroid.value, | ||

| self.dra.data.shape, | ||

| ), | ||

| (self.dra.nonzero()), | ||

| ), | ||

| shape=self.dra.shape, | ||

| dtype=float, | ||

| ) | ||

| return self.dra - ra_offset |

There was a problem hiding this comment.

This is the core change to avoid in-place operation. Does this adds overhead when calling self.dx, I don't think it'd matter much though, only for the case of large sparse data.

| def _update_delta_arrays(self, frame_indices="mean"): | ||

| if self.nsources * self.npixels < 1e7: | ||

| self._update_delta_numpy_arrays() | ||

| else: | ||

| self._update_delta_sparse_arrays() |

There was a problem hiding this comment.

I think this should be renamed to just _create_delta_*_arrays() (or just _delta_*_arrays()) there's no "update" happening there.

The frame_indices="mean" argument I think is unnecessary now.

| if frame_indices == "mean": | ||

| frame_indices = np.where(self.time_mask)[0] |

There was a problem hiding this comment.

This isn't doing anything in the function.

| plot=False, | ||

| ): | ||

| """Find the pixel mask that identifies pixels with contributions from ANY NUMBER of Sources | ||

| def _get_source_mask(self, source_flux_limit=1): |

There was a problem hiding this comment.

this new version of _get_source_mask looks "simpler" than the original one, I mean, with less tunable params, which I like.

Is iterating 2 times good to converge into a solid source_mask?

There was a problem hiding this comment.

A diagnostic plot for this one would be great, something to check we are capturing well the f, r dependency.

| # mask out non finite values and background pixels | ||

| k = (np.isfinite(wgts)) & ( | ||

| self.uncontaminated_source_mask.multiply(self.flux[t]).data > 100 | ||

| def _get_centroid(self, plot=False): |

There was a problem hiding this comment.

we need short documentation here

There was a problem hiding this comment.

Now, this method computes a single centroid value for all frames. Do we want to also have centroids in each frame?

| return | ||

|

|

||

| def _remove_background(self, mask=None): | ||

| def _remove_background(self, mask=None, pixel_knot_spacing=10): |

There was a problem hiding this comment.

we can get rid of photutils dependency

| thumb = np.min(self.flux, axis=0).reshape(self.image_shape) | ||

| gthumb = np.hypot(*np.gradient(thumb)) | ||

| mask = ( | ||

| ~sigma_clip( | ||

| np.ma.masked_array(gthumb, gthumb > 500), | ||

| sigma=3, | ||

| cenfunc=lambda x, axis: 0, | ||

| ).mask | ||

| ).ravel() | ||

| self._remove_background(mask=mask) |

There was a problem hiding this comment.

I think this can happens after super.__init__ now.

| pixel_mask = self.non_sat_pixel_mask & self.non_bright_source_mask | ||

| self.rough_mask = self.rough_mask.multiply(pixel_mask).tocsr() | ||

| self.rough_mask.eliminate_zeros() | ||

| self.source_mask = self.source_mask.multiply(pixel_mask).tocsr() | ||

| self.source_mask.eliminate_zeros() | ||

| self.uncontaminated_source_mask = self.uncontaminated_source_mask.multiply( | ||

| pixel_mask | ||

| ).tocsr() | ||

| self.uncontaminated_source_mask.eliminate_zeros() |

There was a problem hiding this comment.

this is great, never thought on including the sat/bright pixel mask into the machine mask, this way we can keep all original pixels and do nice image plots

😃

|

|

||

|

|

||

| def _combine_A(A, poscorr=None, time=None): | ||

| def _combine_A(A, time, poscorr=None): |

There was a problem hiding this comment.

this one will disappear after merging with perturbation API

| ) | ||

|

|

||

|

|

||

| def _find_uncontaminated_pixels(mask): |

There was a problem hiding this comment.

I'm not convinced this one belongs here, it used t be a hidden method of machine.py

Extracted out of #54, this is just a slightly more robust version of this part of the code.

|

I isolate the efficient FFI changes and open a new PR #71. We'll keep this PR open for future reference when including the in-place operations and new source mask methods. |

This PR refactors a lot of our API to make sure we have no in-place centroids. Here are some top changes:

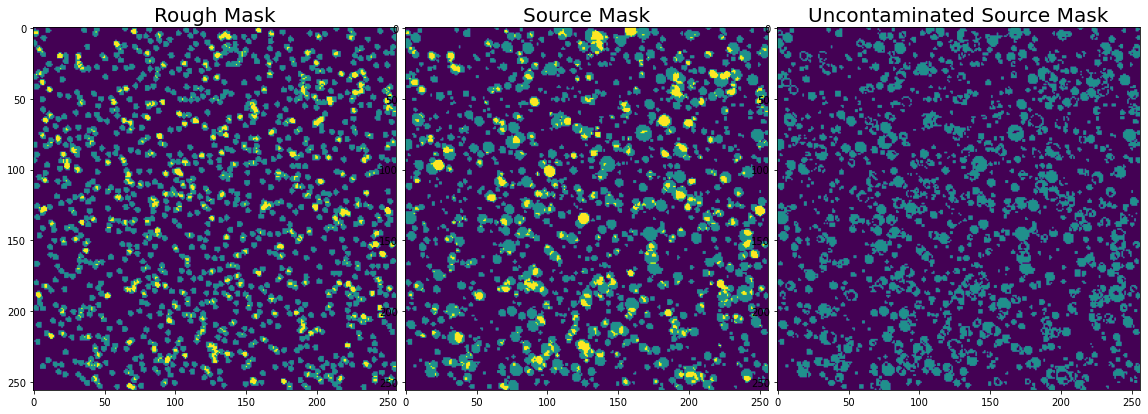

rough_mask, which is our first pass at the maskingsource_maskis now made in a slightly more robust and clear wayrtolto clip out faint parts of the PSF. We just use theatol, i.e. where the PSF has counts greater than some value.rough_mask,source_mask,uncontaminated_source_mask) these areFalse.This PR supersedes #48

To Do